Google Unveils Gemini 1.5 Pro: Bigger Context, Smaller Footprint, Taking Aim at GPT-4

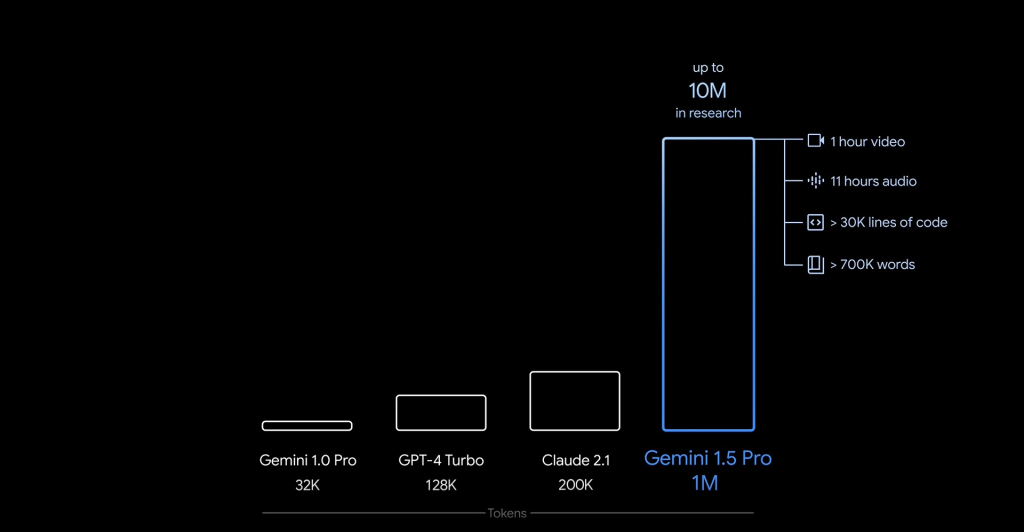

Hot on the heels of Gemini 1.0 Ultra and the rebranding of Bard as Gemini, Google is throwing down the gauntlet with another contender in the AI language model arms race: Gemini 1.5 Pro. The headline feature is its context window: up to 1 million tokens in a limited preview, eclipsing rivals like GPT-4 Turbo (128K) and Claude 2.1 (200K).

While Gemini 1.0 models have a respectable 32K-token context window, 1.5 Pro ships with a 128K window as standard and stretches to 1 million tokens in preview. According to Google, that is enough for roughly 700,000 words, an hour of video, 11 hours of audio, or a codebase of more than 30,000 lines, all in a single prompt!
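To get a feel for what these window sizes mean in practice, here is a back-of-the-envelope sketch. It assumes the ratio implied by the figures above (about 700,000 words per 1 million tokens, or roughly 0.7 words per token) and treats "32K", "128K", and "200K" as round thousands. Real tokenizers vary by model and language, so treat the results as rough estimates only; the function names are made up for this illustration.

```python
# Ratio implied by the article's figures: ~700,000 words in 1,000,000 tokens.
# This is an approximation, not a real tokenizer.
WORDS_PER_TOKEN = 700_000 / 1_000_000

# Context windows as quoted in this article, rounded to whole thousands.
CONTEXT_WINDOWS = {
    "Gemini 1.0": 32_000,
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate how many tokens a text of `word_count` words would need."""
    return round(word_count / WORDS_PER_TOKEN)


def models_that_fit(word_count: int) -> list[str]:
    """List the models whose context window could hold the whole text at once."""
    needed = estimate_tokens(word_count)
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    for words in (20_000, 100_000, 500_000):
        print(f"{words:>7} words -> ~{estimate_tokens(words)} tokens:",
              models_that_fit(words))
```

Under these assumptions, a 500,000-word archive would only fit in the 1-million-token window, which is the kind of workload Google is pitching 1.5 Pro at.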

But wait, there’s more! Despite the much larger window, Google says 1.5 Pro performs at a level comparable to the beefier 1.0 Ultra while using less compute, and that’s thanks to its secret weapon: a Mixture-of-Experts (MoE) architecture.

Imagine a team of specialists, each excelling at a particular kind of problem. That’s the MoE approach. Instead of running a single, monolithic network for every request, 1.5 Pro is split into multiple smaller "expert" networks, and a learned router activates only the experts most relevant to each input. Because only a fraction of the model runs at any one time, it can be cheaper to train and serve without giving up quality.
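The routing idea can be sketched in a few lines of plain Python. This is a generic top-k gated MoE layer with random weights, written purely for illustration: Google has not published the number of experts, the routing scheme, or any other internals of 1.5 Pro, so every name and parameter here (`ToyMoELayer`, `num_experts`, `top_k`) is an assumption.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """A toy 'expert': one random linear map from dim inputs to dim outputs."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


class ToyMoELayer:
    """Illustrative top-k Mixture-of-Experts layer (not Gemini's actual design)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        self.experts = [make_expert(dim, rng) for _ in range(num_experts)]
        # Router: one scoring vector per expert.
        self.router = [[rng.uniform(-1, 1) for _ in range(dim)]
                       for _ in range(num_experts)]

    def route(self, x):
        """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        chosen = sorted(range(len(scores)), key=lambda i: scores[i],
                        reverse=True)[:self.top_k]
        weights = softmax([scores[i] for i in chosen])
        return list(zip(chosen, weights))

    def forward(self, x):
        """Run only the selected experts and blend their outputs by router weight."""
        out = [0.0] * len(x)
        for idx, weight in self.route(x):
            y = self.experts[idx](x)
            out = [o + weight * yi for o, yi in zip(out, y)]
        return out


if __name__ == "__main__":
    layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
    x = [1.0, 0.5, -0.3, 2.0]
    print("experts used:", layer.route(x))
    print("output:", layer.forward(x))
```

With 8 experts and `top_k=2`, only a quarter of the expert weights are touched per input, which is where the efficiency gain comes from. In a real model the router and experts are trained jointly rather than initialized at random.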

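Google put 1.5 Pro’s mammoth memory to the test with the “Needle in a Haystack” challenge, in which a small piece of text is buried inside a very long input and the model is asked to retrieve it. Google reports that the model found the target text a whopping 99% of the time in inputs as long as 1 million tokens, far beyond what the 32K window of Gemini 1.0 models could even take in.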
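For readers who want to try the idea themselves, a needle-in-a-haystack test can be sketched as follows. This is not Google's evaluation harness: the filler text, the needle, and the `ask_model` callable are all hypothetical, and `fake_model` is a stand-in that pretends to have a tiny context window so the script runs without any API access.

```python
from typing import Callable

# Hypothetical test data, invented for this example.
FILLER = "The quick brown fox jumps over the lazy dog."
NEEDLE = "The secret passphrase is 'violet-otter-42'."
QUESTION = "What is the secret passphrase?"
EXPECTED = "violet-otter-42"


def build_haystack(num_sentences: int, depth: float) -> str:
    """Bury NEEDLE in filler text at a relative depth (0.0 = start, 1.0 = end)."""
    sentences = [FILLER] * num_sentences
    sentences.insert(int(depth * num_sentences), NEEDLE)
    return " ".join(sentences)


def recall_rate(ask_model: Callable[[str], str],
                num_sentences: int,
                depths: list[float]) -> float:
    """Fraction of needle placements for which the model's answer contains EXPECTED."""
    hits = 0
    for depth in depths:
        prompt = build_haystack(num_sentences, depth) + "\n\n" + QUESTION
        if EXPECTED in ask_model(prompt):
            hits += 1
    return hits / len(depths)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: it only 'reads' the first 2,000 characters."""
    visible = prompt[:2000]
    return EXPECTED if EXPECTED in visible else "I don't know."


if __name__ == "__main__":
    depths = [0.0, 0.25, 0.5, 0.75, 1.0]
    print("recall:", recall_rate(fake_model, num_sentences=100, depths=depths))
```

To test a real model, replace `fake_model` with a function that sends the prompt to the model and returns its reply, then scale `num_sentences` up toward the context limit and sweep the depth to see where recall starts to drop.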

Early Access and What’s Next:

Currently, 1.5 Pro is in a limited preview, accessible through AI Studio and Vertex AI. If you’re a developer or enterprise customer, you can join the waitlist and try it for free during the testing period.

This is a significant step forward for Google in the AI language model game. With its 1-million-token context window and efficient MoE architecture, 1.5 Pro has the potential to challenge the dominance of players like GPT-4. How well those numbers hold up outside Google's own tests will become clearer as the preview widens and independent comparisons arrive. Stay tuned for further updates as we dig into its capabilities.