Google DeepMind has introduced Genie 3, a groundbreaking AI model designed to generate interactive, playable environments from a single input image, with no labeled data or supervision required. This leap forward in world modeling could reshape the way games, simulations, and virtual environments are built, moving creation from manual coding to rapid, AI-powered synthesis.

Unsupervised Training on a Massive Scale

Genie 3 stands out for its unsupervised learning approach. Rather than relying on hand-annotated datasets, DeepMind trained the model solely on video clips gathered from the open web, without action labels; the actions behind each clip are inferred by the model itself. The dataset is enormous: 30 million video clips, described as one of the largest such collections ever used for this type of AI training.
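Because no action labels are involved, the training signal has to come from the video itself: a world model of this kind typically sees a few frames and learns to predict the next one. The snippet below is a minimal sketch of how such self-supervised examples can be cut from a single clip, assuming frames are already decoded into some array or token form. `make_next_frame_examples` and `context_len` are hypothetical names for illustration, not part of DeepMind's published pipeline.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def make_next_frame_examples(
    clip: Sequence[Frame], context_len: int
) -> List[Tuple[List[Frame], Frame]]:
    """Slide a window over an unlabeled clip (hypothetical helper).

    Each example pairs `context_len` consecutive frames with the frame that
    follows them. No action labels are needed: the target is the video itself.
    """
    if context_len < 1:
        raise ValueError("context_len must be at least 1")
    examples = []
    for t in range(context_len, len(clip)):
        context = list(clip[t - context_len : t])
        target = clip[t]
        examples.append((context, target))
    return examples


# Integers stand in for decoded frames.
print(make_next_frame_examples([0, 1, 2, 3, 4], context_len=2))
# [([0, 1], 2), ([1, 2], 3), ([2, 3], 4)]
```

Clips shorter than the context window simply yield no examples.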

From a Single Image to a Playable Game

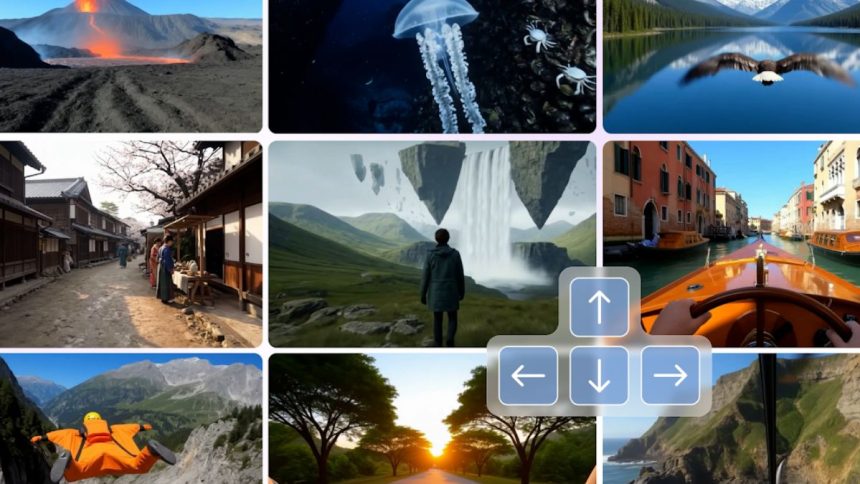

Users interact with Genie 3 by uploading a single image, which can be hand-drawn, rendered, or a real-world photograph. The model then performs three steps:

- Spatial Extraction: Detects the layout and key objects in the image.

- Latent Action Modeling: Deduces how movement or interaction could work in this environment.

- Dynamic Simulation: Generates a playable, interactive scene that is controlled like a classic side-scrolling game.

All motion, character control, and simulated physics come from Genie 3's learned internal representations rather than from a game engine. This enables real-time interaction with entirely AI-generated environments.
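Conceptually, playing a generated world is an autoregressive loop: each user action is fed to the dynamics model together with the frames generated so far, and the predicted frame becomes part of the history for the next step. The toy sketch below illustrates only that loop. It assumes the input image has already been turned into tokens, and `play`, `toy_dynamics`, and `render` are hypothetical stand-ins: a hand-written rule that moves a player cell left or right replaces the learned model.

```python
from typing import Callable, List, Sequence

Tokens = List[int]


def play(
    start_tokens: Tokens,
    actions: Sequence[int],
    dynamics: Callable[[List[Tokens], int], Tokens],
    render: Callable[[Tokens], str],
) -> List[str]:
    """Roll out one new frame per user action (hypothetical interface)."""
    history: List[Tokens] = [start_tokens]
    frames = [render(start_tokens)]
    for action in actions:
        # The next frame depends on everything generated so far plus the action.
        next_tokens = dynamics(history, action)
        history.append(next_tokens)
        frames.append(render(next_tokens))
    return frames


def toy_dynamics(history: List[Tokens], action: int) -> Tokens:
    """Stand-in for a learned model: action 0 moves left, 1 moves right."""
    current = history[-1]
    pos = current.index(1)
    step = -1 if action == 0 else 1
    new_pos = min(max(pos + step, 0), len(current) - 1)  # stay inside the world
    nxt = [0] * len(current)
    nxt[new_pos] = 1
    return nxt


def render(tokens: Tokens) -> str:
    """Draw the player as '@' and empty cells as '.'."""
    return "".join("@" if t else "." for t in tokens)


for frame in play([0, 0, 1, 0, 0], actions=[1, 1, 0], dynamics=toy_dynamics, render=render):
    print(frame)
# ..@..
# ...@.
# ....@
# ...@.
```

In a real world model the `dynamics` call would be a large neural network over video tokens; the loop structure is the part this sketch is meant to show.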

Key Model Architecture

The Genie 3 pipeline features:

- Spatiotemporal Video Tokenizer: Converts video frames into compact tokens, efficiently capturing both visual and temporal structure.

- Latent Action Model: Infers, from video alone, what types of actions (movements, interactions) explain the change between consecutive frames, forming a compressed, transferable “action space”.

- Dynamics Model: Predicts what happens next, generating the tokens of the next frame from the previous frames and the chosen action.

- Renderer: Decodes the predicted tokens back into video frames, producing a lifelike, scrollable gameplay experience.
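The compressed “action space” of the Latent Action Model can be pictured as vector quantization: each observed transition is summarized as a vector and snapped to the nearest entry in a small codebook, so every frame-to-frame change maps to one of a handful of discrete actions. The sketch below assumes that framing. A plain difference of hypothetical per-frame feature vectors stands in for the learned encoder, and the four codebook entries and their left/right/jump/idle labels are invented for illustration; in a trained model the codes are learned and carry no built-in names.

```python
import math
from typing import List, Sequence

# Hypothetical 2-D codebook; a real model would learn these vectors.
CODEBOOK: List[Sequence[float]] = [
    (-1.0, 0.0),  # "left"  (label for illustration only)
    (1.0, 0.0),   # "right"
    (0.0, 1.0),   # "jump"
    (0.0, 0.0),   # "idle"
]


def quantize_action(
    prev_feat: Sequence[float],
    next_feat: Sequence[float],
    codebook: Sequence[Sequence[float]] = CODEBOOK,
) -> int:
    """Return the index of the codebook entry closest to the observed change."""
    # Stand-in for a learned encoder: the raw change between two frames' features.
    delta = [n - p for p, n in zip(prev_feat, next_feat)]
    # Nearest-neighbour lookup by Euclidean distance (vector quantization).
    distances = [math.dist(delta, code) for code in codebook]
    return min(range(len(codebook)), key=distances.__getitem__)


print(quantize_action((2.0, 0.0), (2.9, 0.1)))  # 1 ("right")
print(quantize_action((2.0, 0.0), (2.0, 0.8)))  # 2 ("jump")
```

Keeping the codebook small is what makes the action space compact: a user, or the dynamics model, only ever has to choose among a few indices.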

All of these components are learned from internet video, with no reliance on conventional game code.

Current Limitations

Genie 3, while impressive, is still a prototype and has clear limitations:

- 2D Side-Scrolling Constraint: The generated worlds are 2D side-scrollers, not yet true free-form 3D.

- Limited Character Movement: Characters move mainly left or right, with little support for more complex actions.

- No Long-term Memory or Planning: The model does not track long-term objectives or plan over extended periods of time.

- Visual Fidelity Can Vary: Out-of-distribution (unusual) images can result in lower-quality scenes.

DeepMind stresses that Genie 3’s main value today is as a research tool, revealing what’s possible in controllable environment learning from passive video data.

Availability

Genie 3 is currently available only as a limited research preview. DeepMind has hinted that, pending positive feedback and further safety screening, wider access could follow.

Genie 3 represents a major milestone in AI-driven environment generation, opening doors to new creative workflows in gaming, education, simulation, and beyond. While still in its early days, it demonstrates how unsupervised AI can turn the world’s visual experience—captured in everyday videos—into interactive digital realities.