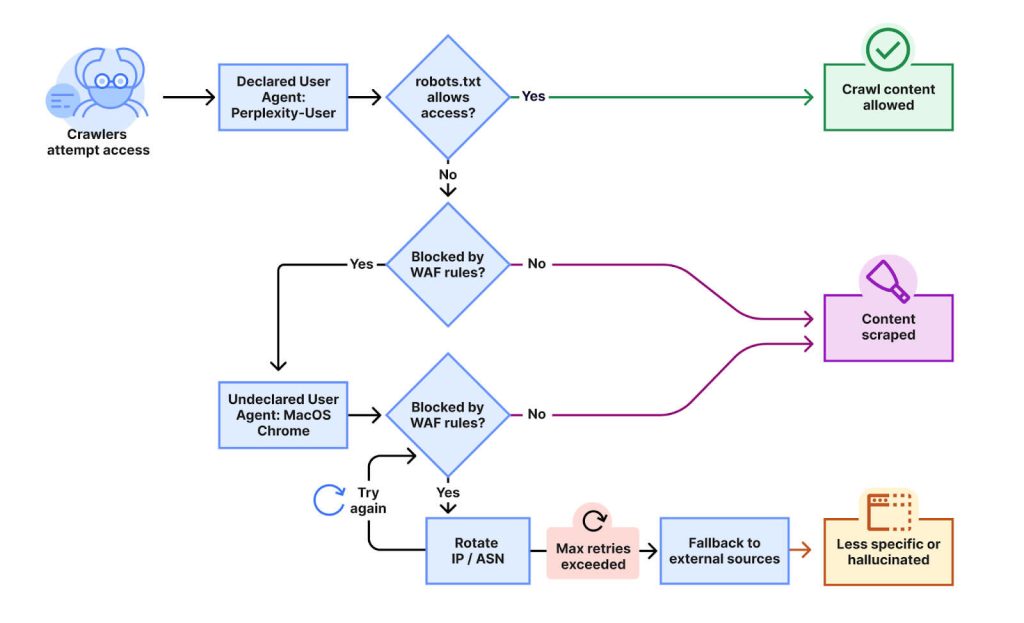

Perplexity, an AI-powered search platform, has been accused by Cloudflare of using stealth web crawlers to bypass website restrictions designed to block them. According to a recent Cloudflare report, Perplexity initially uses its declared bots, such as “PerplexityBot” and “Perplexity-User,” but when blocked by robots.txt files or Web Application Firewalls (WAF), it switches to disguising its crawlers as generic browsers like Google Chrome on macOS. Additionally, Perplexity rotates through unlisted IP addresses and uses multiple autonomous system numbers (ASNs) to evade detection, enabling it to access content on sites that explicitly prohibit crawling.
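To make the mechanism concrete, here is a minimal sketch of why rules aimed at declared bots stop mattering once a crawler presents a browser user agent. It uses Python's standard `urllib.robotparser`. The robots.txt contents, the `is_allowed` helper, the example URL, and the Chrome user-agent string are all illustrative assumptions, not material from Cloudflare's report.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that names the two declared Perplexity agents.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: Perplexity-User
Disallow: /
"""

# Hypothetical values, used only for illustration.
URL = "https://example.com/article"
CHROME_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("PerplexityBot", "Perplexity-User", CHROME_UA):
        print(f"{agent[:40]!r}: allowed={is_allowed(agent, URL)}")
```

The two declared agents are disallowed, while the browser-style string matches no rule and is allowed by default. robots.txt is also purely advisory: it works only if the client checks it and identifies itself honestly, which is why the report treats a switch to a generic browser identity as evasion rather than a loophole.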

Cloudflare’s investigation involved creating new websites that blocked Perplexity’s known bots and forbade crawling via robots.txt. Despite these measures, Perplexity was still able to retrieve and summarize content from these protected sites, demonstrating “stealth crawling” tactics. Cloudflare has consequently removed Perplexity from its verified bots list and implemented measures to block such covert crawling.

Perplexity responded by dismissing the allegations as a “publicity stunt,” arguing that its AI agents access websites only in response to specific user queries to provide up-to-date answers and do not store content for training. The company also questioned the labeling of AI-assisted content retrieval as malicious, cautioning against criminalizing automated tools essential for internet functionality.

This ongoing tension highlights the challenges AI companies face in sourcing current web data while respecting website owners’ rights and technical constraints. Cloudflare has emphasized that bots from other AI companies, such as OpenAI, comply with website rules, whereas Perplexity’s stealth approach raises concerns among publishers and infrastructure providers about transparency and respect for web content guidelines.

In response to this controversy, web infrastructure providers like Cloudflare are strengthening their bot detection and blocking capabilities to protect publishers’ content from unauthorized scraping, underscoring the complexity of regulating AI web crawling ethically and effectively.