OpenAI has launched two new open-weight language models, gpt-oss-120b and gpt-oss-20b, under the permissive Apache 2.0 license. The license lets developers freely download, run, inspect, customize, and deploy the models without copyleft restrictions or patent risk. The models offer advanced reasoning and tool-use capabilities comparable to some of OpenAI's proprietary models, with far more flexibility for local and edge deployment.

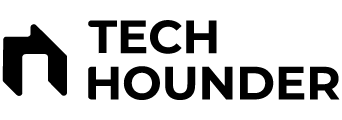

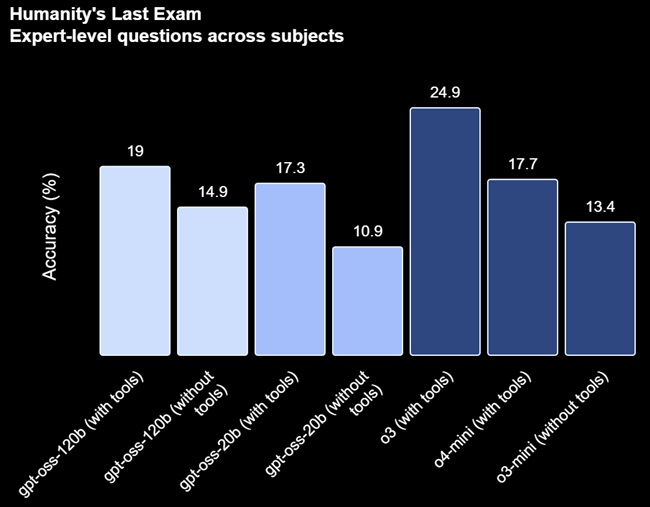

The gpt-oss-120b model has 117 billion total parameters across 36 layers. Because it is a Mixture-of-Experts (MoE) model, it activates only 5.1 billion of them per token. It runs on a single 80 GB GPU and matches or exceeds OpenAI's o4-mini on a range of benchmarks, including general reasoning, health-related queries, competition mathematics, and coding. The smaller gpt-oss-20b has 21 billion parameters in 24 layers and activates 3.6 billion parameters per token. It runs on devices with as little as 16 GB of memory, such as consumer laptops, and performs similarly to o3-mini. Both models natively support context lengths of up to 128k tokens. To keep inference efficient, they use Rotary Positional Embeddings (RoPE), grouped multi-query attention, and alternating dense and locally banded sparse attention.
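To make the "total vs. active parameters" distinction concrete, here is a minimal, generic sketch of top-k MoE routing in plain Python. It is not gpt-oss's implementation. The experts are toy functions, and two details are assumptions for illustration: the router keeps the k highest gate logits, and it renormalizes them with a softmax over only those k. The point is that experts outside the top k are never evaluated for a given token. A small helper also computes the active-parameter fraction from the figures quoted above.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Select the k experts with the highest gate logits (assumed routing rule)
    and renormalize their logits with a softmax over just those k."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: Vector, gate_logits: Sequence[float],
                experts: Sequence[Expert], k: int) -> Vector:
    """Run only the k routed experts on x and return their weighted sum.
    Unselected experts are skipped entirely, which is why the active
    parameter count per token is far below the total parameter count."""
    out = [0.0] * len(x)
    for idx, w in top_k_route(gate_logits, k):
        y = experts[idx](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


def active_fraction(active_params_b: float, total_params_b: float) -> float:
    """Share of parameters used per token, given counts in billions."""
    return active_params_b / total_params_b


if __name__ == "__main__":
    # Four toy "experts" that just scale their input by 1, 2, 3, and 4.
    experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
    # Experts 2 and 3 win the top-2 routing with equal weight 0.5 each.
    print(moe_forward([1.0, 1.0], [0.0, 0.0, 5.0, 5.0], experts, k=2))  # [3.5, 3.5]
    print(round(active_fraction(5.1, 117), 3))  # gpt-oss-120b: 0.044
    print(round(active_fraction(3.6, 21), 3))   # gpt-oss-20b: 0.171
```

By this arithmetic, gpt-oss-120b touches roughly 4.4% of its weights per token and gpt-oss-20b roughly 17%. That gap is what lets the larger model stay practical on a single GPU.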

Both models were post-trained with supervised fine-tuning and reinforcement learning to strengthen their chain-of-thought (CoT) reasoning and structured-output capabilities. Users can set the reasoning effort (low, medium, or high) in the system instructions, trading latency against answer quality to suit the use case. For safety, OpenAI filtered harmful chemical, biological, radiological, and nuclear (CBRN) data out of pre-training, applied refusal training, and evaluated adversarially fine-tuned versions of the models to estimate worst-case misuse. The chain of thought was deliberately left without direct supervision so that it remains useful for monitoring model behavior. As a consequence, it may contain hallucinations and should not be shown to end users unfiltered.
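The following is a minimal sketch of how an application might pin the reasoning effort per request. It assumes the effort is expressed as a line of the form `Reasoning: <level>` in the system message, which is how OpenAI's guidance describes it. The OpenAI-style `{"role", "content"}` message list and the helper name `build_messages` are illustrative, not a specific library's API.

```python
from typing import Dict, List

# Effort levels described for gpt-oss (assumed to be exactly these three).
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> List[Dict[str, str]]:
    """Hypothetical helper: build a chat message list whose system message
    sets the reasoning effort via an assumed "Reasoning: <level>" line."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]
```

A latency-sensitive chat feature might call `build_messages(prompt, "low")`, while a batch job solving math problems might use `"high"` and accept longer responses.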

A notable focus is on-device inference. Qualcomm's Snapdragon platforms will support gpt-oss-20b for fully local reasoning on smartphones, PCs, XR headsets, and vehicles starting in 2025, enabling low-latency, private AI experiences without cloud dependency. The weights are available on Hugging Face, alongside inference tools and prompt renderers, and through local runtimes such as Ollama and llama.cpp. Cloud platforms including Azure, AWS, and Databricks also support the models.
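For local use, the sketch below sends a chat request to a running Ollama server using only the Python standard library. It assumes Ollama's default endpoint (`http://localhost:11434/api/chat`), the `gpt-oss:20b` model tag, and a non-streaming response whose reply text sits under `message.content`. Adjust these if your installation differs. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List

# Assumed default address of a local Ollama server's chat endpoint.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(messages: List[Dict[str, str]], model: str = "gpt-oss:20b",
                       url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's chat API."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def chat(messages: List[Dict[str, str]], model: str = "gpt-oss:20b",
         timeout: float = 300.0) -> str:
    """Send the request and return the assistant's reply text.
    Assumes the JSON response has the shape {"message": {"content": ...}}."""
    req = build_chat_request(messages, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return payload["message"]["content"]
```

After `ollama pull gpt-oss:20b` and with the server running, `chat([{"role": "user", "content": "Explain MoE in one sentence."}])` returns the model's answer. Nothing leaves the machine.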

OpenAI's gpt-oss-120b and gpt-oss-20b models represent a major shift toward open-weight, high-performance language models built for broad access, customization, and deployment flexibility, from data center GPUs down to consumer laptops and edge devices. They bring advanced reasoning, tool use, and chain-of-thought capabilities to developers, backed by safety measures intended to make them suitable for both research and production use.